AI-Powered Customer Support Platform

Product Manager, AI

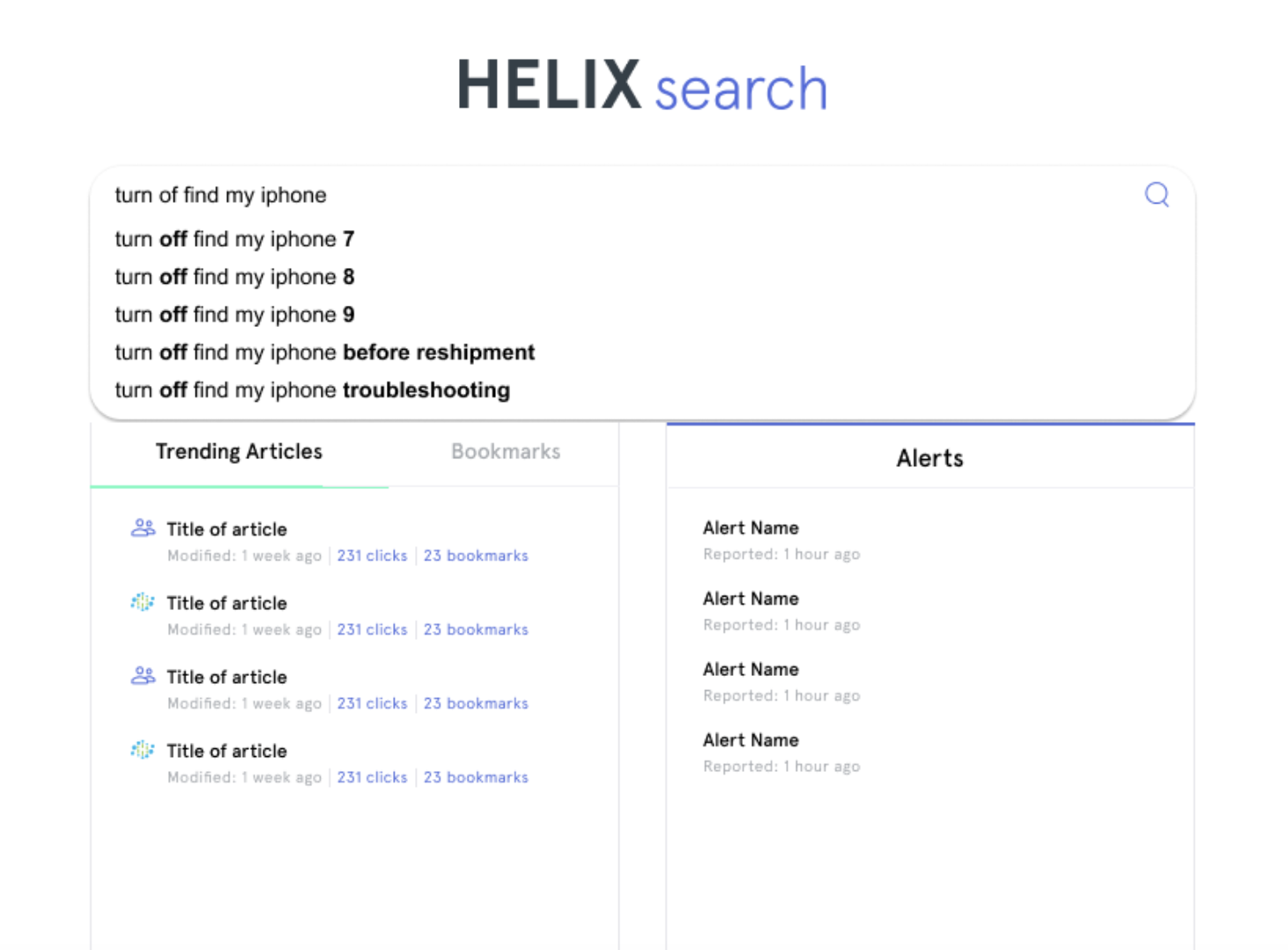

Redesigned a knowledge base search engine with AI to reduce support costs and improve the accuracy of help center results.

The Story

When we first started talking about improving internal support tools, there was interest but also hesitation. AI was not something the operations teams had planned for, and getting the project off the ground took time. I spent months aligning stakeholders across call centers, operations, compliance, and engineering, working through many approvals, open questions, and roadblocks before we could move forward.

Once we had the green light, we got to work. I kicked off discovery by interviewing dozens of support agents and shadowing their workflows. We watched how they used the existing tools and where things broke down. One pattern stood out right away: most agents typed full questions into the search tool, but the system only matched keywords. That mismatch was costing time and creating frustration.

We rebuilt the search experience from the inside out. I partnered with data science to define the model scope and performance metrics. Then I worked with our product and engineering teams to figure out how the models would integrate into the broader app. I collaborated closely with our UX designer to bring the new capabilities into the interface in a way that felt familiar, fast, and helpful.

The rollout was deliberately gradual. We deployed team by team across five call centers, tracking performance, collecting feedback, and improving as we went. As the tool proved itself, momentum built quickly. Agents loved the faster results, managers saw improved efficiency, and executives began to take notice.

We kept going, releasing updates that improved autocomplete and surfaced more relevant related articles. The system started adapting to usage patterns, and the experience kept getting better. What started as a focused improvement turned into a much larger shift: the project gave our team credibility and opened the door for more AI initiatives across operations.